It’s all anyone can talk about. In classrooms, in boardrooms, on the nightly news, and around the dinner table, artificial intelligence (AI) is dominating conversations. Given how passionately people are debating, celebrating, and villainizing AI, you’d think it was a completely new technology; however, AI has been around in various forms for decades. Only now is it accessible to everyday people like you and me.

Among the most famous of these mainstream AI tools are ChatGPT, DALL-E, and Bard. The specific technology that links these tools is called generative artificial intelligence. Sometimes shortened to gen AI, it’s a term you’ve likely heard in the same sentence as deepfake, AI art, and ChatGPT. But how does the technology work?

Here’s a simple explanation of how generative AI powers many of today’s famous (or infamous) AI tools.

What Is Generative AI?

Generative AI is the specific type of artificial intelligence that powers many of the AI tools now available in the pockets of the public. The “G” in ChatGPT stands for generative. Today’s gen AI evolved from the chatbots of the 1960s. Now, as AI and related technologies like deep learning and machine learning have advanced, generative AI can answer prompts, create text, art, and videos, and even simulate convincing human voices.

How Does Generative AI Work?

Think of generative AI as a sponge that desperately wants to delight the users who ask it questions.

First, a gen AI model begins with a massive information deposit. Gen AI can soak up huge amounts of data. For instance, ChatGPT was reportedly trained on roughly 300 billion words, amounting to hundreds of gigabytes of text. The AI doesn’t store every piece of information word for word. Instead, training distills the patterns in that data, and the model draws on those patterns to inform any answer it produces.

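From there, the approach depends on the type of model. Some image generators use a generative adversarial network (GAN), in which two neural networks compete: one produces candidates, and the other tries to tell them apart from real examples. Text tools like ChatGPT and Bard instead rely on large language models, which are trained to predict the next word in a sequence and are then refined with human feedback. In both cases, the model is pushed to produce the output it rates as most likely to be right, and more and better training data generally makes the AI “smarter.”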

Google’s content generation tool, Bard, is a great way to illustrate generative AI in action. Bard is based on gen AI and large language models. It’s trained on all types of literature, and when asked to write a short story, it does so by finding language patterns and choosing, one word at a time, the words most likely to follow the ones before them. In a 60 Minutes segment, Bard composed an eloquent short story that nearly brought the presenter to tears, but its composition was an exercise in patterns, not a display of understanding human emotions. So, while the technology is certainly smart, it’s not exactly creative.
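
To make the idea of “choosing the word that usually comes next” concrete, here is a toy sketch in Python. It is not how Bard or ChatGPT is actually built; real tools use neural networks trained on vastly more text. The tiny corpus and the function names `build_bigram_counts` and `generate` are made up for illustration.

```python
from collections import Counter, defaultdict


def build_bigram_counts(text):
    """Count, for each word, which words follow it and how often."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        followers[current][nxt] += 1
    return followers


def generate(followers, start, length=5):
    """Extend `start` by repeatedly picking the most frequent next word."""
    output = [start]
    for _ in range(length):
        options = followers.get(output[-1])
        if not options:
            break  # this word was never followed by anything in the corpus
        output.append(options.most_common(1)[0][0])
    return " ".join(output)


# Hypothetical miniature "training data" (an assumption for this example).
corpus = (
    "the cat sat on the mat . "
    "the cat sat on the sofa . "
    "the cat ate the fish ."
)
model = build_bigram_counts(corpus)
print(generate(model, "the", length=3))  # prints "the cat sat on"
```

The program has no idea what a cat is. It only knows that “cat” follows “the” more often than anything else in its training text, which is the same pattern-over-understanding point made above, at a much smaller scale.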

How to Use Generative AI Responsibly

The major debates surrounding generative AI usually deal with how to use gen AI-powered tools for good. For instance, ChatGPT can be an excellent outlining partner if you’re writing an essay or completing a task at work; however, it’s irresponsible and is considered cheating if a student or an employee submits ChatGPT-written content word for word as their own work. If you do decide to use ChatGPT, it’s best to be transparent that it helped you with your assignment. Cite it as a source and make sure to double-check your work!

One lawyer got in serious trouble when he trusted ChatGPT to write an entire brief and then didn’t take the time to edit its output. It turns out that much of the content was incorrect and cited sources that didn’t exist. This is a phenomenon known as an AI hallucination, meaning the program fabricated a response instead of admitting that it didn’t know the answer to the prompt.

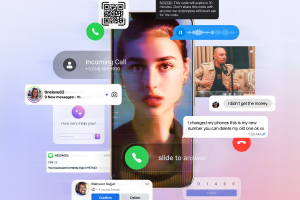

Deepfake and voice simulation technology supported by generative AI are other applications that people must use responsibly and with transparency. Deepfake and AI voices are gaining popularity in viral videos and on social media. Posters use the technology in funny skits poking fun at celebrities, politicians, and other public figures. However, to avoid confusing the public and possibly spurring fake news reports, these comedians have a responsibility to add a disclaimer that the real person was not involved in the skit. Fake news reports can spread with the speed and ferocity of wildfire.

The widespread use of generative AI doesn’t necessarily mean the internet is a less authentic or a riskier place. It just means that people must use sound judgment and hone their radar for identifying malicious AI-generated content. Generative AI is an incredible technology. When used responsibly, it can add great color, humor, or a different perspective to written, visual, and audio content.

Technology can also help protect against voice cloning attacks. Tools like McAfee Deepfake Detector aim to detect AI-generated deepfakes, including audio-based clones. Stay informed about advancements in security technology and consider using such tools to bolster your defenses.